When AI Runs Dry: The Challenge of Training Models on Sparse Medical & Biomechanical Data

Disclaimer: this is an AI-generated article intended to highlight interesting concepts / methods / tools used within the SmartDATA Lab's research. This is for educating lab members as well as general readers interested in the lab. The article may contain errors.

Why building AI for clinics and human movement feels like playing blindfolded chess—and what we can do about it

We all love the idea of AI diagnosing diseases from a single MRI scan or powering exoskeletons that move as naturally as we do. But guess what? These applications often falter because there’s simply not enough data—or the data is imbalanced, messy, and hard to collect. In medicine and biomechanics, training robust AI models is more like playing chess blindfolded: with limited pieces, incomplete vision, and a big risk of making the wrong moves.

The Data Desert

In fields like radiology or motion analysis, gathering high-quality data is not just difficult; it is often prohibitively expensive and ethically constrained. MRI scans, motion capture sessions, and clinical trials demand time, resources, and informed consent. Many datasets contain a few dozen healthy subjects and, with luck, a handful of patients with the condition of interest.

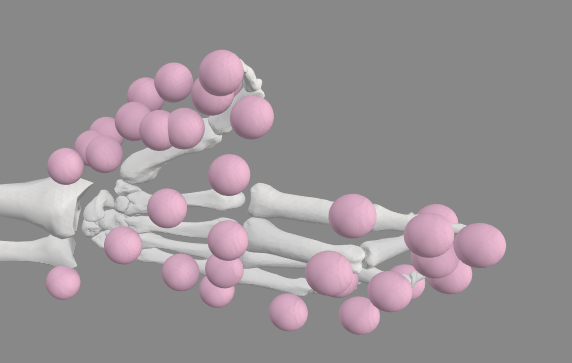

In Lindbeck et al., we trained neural networks to predict biomechanical parameters from pinch-force data and demographic information, but only after synthesizing data from a virtual population of 40,000 subjects. Performance held when the models were applied to 10,000 unseen synthetic individuals, but validation on real clinical data remained limited. The study showed promise, and it also showed that synthetic data cannot fully capture real-world complexity.

Clinical Accessibility ≠ Lab Perfection

Even models that reach scientific heights need to thrive on clinic-ready data. That means training AI to work with simple, easy-to-collect inputs: a smartphone-recorded pinch strength, patient weight, or a 2D radiograph—not high-resolution MRI or full-body marker sets.

In Tappan et al., we demonstrated this by training models to distinguish healthy from surgically altered wrist biomechanics using easily measured lateral pinch force. Importantly, we used explainable AI (XAI) tools to confirm that the model based its decisions on features that align with known physiology, linking AI predictions to medical interpretability.

Architectures That Embrace Sparsity

When your dataset is tiny or unbalanced, standard deep learning tends to overfit. The key is architectural restraint and clever math:

- Transfer learning: In Kearney et al., we pre-trained an LSTM on large, simulated datasets, then fine-tuned it on real experimental data. This reduced torque prediction error by ~25%, showing how even one strong simulation can amplify limited real data.

- Synthetic augmentation: In Lindbeck et al., training the model required thousands of virtual subjects. Synthetic data, interpolation via inverse-distance weighting, or generative adversarial networks (GANs) can expand the feature space, but they also risk introducing simulation bias that models memorize rather than generalize from.
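To make the simulation-to-real idea concrete, here is a minimal NumPy sketch, not the method used in Kearney et al. It swaps the LSTM for a tiny one-hidden-layer network, and the data generator `simulate`, the `gain` shift between simulated and "real" data, and all sizes and learning rates are hypothetical choices for illustration. The hidden layer is pre-trained on plentiful simulated data, then kept fixed while only the output layer is refit on 30 "real" samples.

```python
import numpy as np

rng = np.random.default_rng(0)

def simulate(n, gain, noise):
    """Hypothetical toy data; `gain` mimics a simulation-to-reality mismatch."""
    X = rng.uniform(-1.0, 1.0, size=(n, 2))
    y = gain * np.sin(2.0 * X[:, 0]) + 0.5 * X[:, 1] ** 2
    return X, y + noise * rng.standard_normal(n)

def hidden(X, W, b):
    """Hidden-layer features of the small network."""
    return np.tanh(X @ W + b)

def pretrain(X, y, n_hidden=8, lr=0.1, epochs=3000):
    """Full-batch gradient descent on half the mean squared error."""
    W = 0.5 * rng.standard_normal((X.shape[1], n_hidden))
    b, v, c = np.zeros(n_hidden), np.zeros(n_hidden), 0.0
    n = len(X)
    for _ in range(epochs):
        H = hidden(X, W, b)
        err = H @ v + c - y                      # residuals, shape (n,)
        dH = np.outer(err, v) * (1.0 - H ** 2)   # backprop through tanh
        W -= lr * (X.T @ dH) / n
        b -= lr * dH.mean(axis=0)
        v -= lr * (H.T @ err) / n
        c -= lr * err.mean()
    return W, b, v, c

def fit_head(H, y, lam):
    """Ridge regression for the output layer only (intercept not penalized)."""
    A = np.column_stack([H, np.ones(len(H))])
    P = lam * np.eye(A.shape[1])
    P[-1, -1] = 0.0
    return np.linalg.solve(A.T @ A + P, A.T @ y)

def mse(pred, y):
    return float(np.mean((pred - y) ** 2))

# Plenty of simulated data, very little "real" data from a shifted process.
X_sim, y_sim = simulate(2000, gain=1.0, noise=0.05)
X_real, y_real = simulate(30, gain=2.0, noise=0.05)
X_test, y_test = simulate(500, gain=2.0, noise=0.05)

W, b, v, c = pretrain(X_sim, y_sim)
W_frozen, b_frozen = W.copy(), b.copy()          # snapshot to check freezing

# Baseline: apply the simulation-only model directly to real test data.
mse_sim_only = mse(hidden(X_test, W, b) @ v + c, y_test)

# Fine-tune: hidden layer stays frozen, only the output layer is refit.
head = fit_head(hidden(X_real, W, b), y_real, lam=1e-2)
H_test = np.column_stack([hidden(X_test, W, b), np.ones(len(X_test))])
mse_finetuned = mse(H_test @ head, y_test)

print(f"simulation-only MSE on real test data: {mse_sim_only:.3f}")
print(f"fine-tuned MSE on real test data:      {mse_finetuned:.3f}")
```

Refitting only the output layer keeps the number of parameters learned from real data small (nine here), which is the main reason this kind of fine-tuning can work with a few dozen samples.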

The Math Underneath

Training with limited data is all about raising the signal and suppressing the noise. Here’s how math helps:

- Feature extraction: We collect high-dimensional data—force traces, time-series, or sensor arrays. Through dimensionality reduction (e.g., singular value decomposition, principal component analysis), we compress data to its most informative components—highlighting meaningful signals while downplaying noise.

- Covariance estimation: Understanding how features covary (e.g., force rise rate with muscle activation) guides model designs that respect physiological constraints, such as using covariance-weighted regularization to prevent overfitting.

- Transfer learning weight freezing: Because a network’s layers are stored as weight matrices, we can freeze the many weights of the pre-trained layers, keeping beneficial features learned from simulations, and adapt only the remaining layers to clinical data without catastrophic forgetting.
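The first two items in the list above can be sketched in a few lines of NumPy. This is an illustrative example under stated assumptions: the force traces are synthetic (two made-up temporal patterns plus noise), and the shrinkage weight `alpha` is a hypothetical fixed value rather than one estimated from data. It shows PCA computed through the singular value decomposition, and a simple shrinkage covariance estimate that stays invertible even when there are fewer subjects than features.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical data: 25 subjects, each a 200-sample force trace built from
# two underlying temporal patterns plus measurement noise.
t = np.linspace(0.0, 1.0, 200)
patterns = np.vstack([np.sin(np.pi * t), np.sin(2.0 * np.pi * t)])
latent = rng.standard_normal((25, 2)) * np.array([3.0, 1.5])
X = latent @ patterns + 0.1 * rng.standard_normal((25, 200))

def pca_svd(X, k):
    """PCA via SVD: returns per-subject scores, components, explained variance ratio."""
    Xc = X - X.mean(axis=0)                       # center each time sample
    U, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)
    return Xc @ Vt[:k].T, Vt[:k], explained[:k]

scores, components, explained = pca_svd(X, k=2)
print("scores shape:", scores.shape)
print("variance explained by 2 components:", float(explained.sum()))

def shrink_covariance(Z, alpha):
    """Blend the sample covariance with its own diagonal (linear shrinkage)."""
    S = np.cov(Z, rowvar=False)
    return (1.0 - alpha) * S + alpha * np.diag(np.diag(S))

# With 8 subjects and 10 features the sample covariance is rank deficient;
# the shrunk estimate is positive definite and can be inverted.
Z = X[:8, ::20]
S = np.cov(Z, rowvar=False)
S_shrunk = shrink_covariance(Z, alpha=0.2)
print("sample covariance rank:", np.linalg.matrix_rank(S), "of", S.shape[0])
print("smallest eigenvalue after shrinkage:", float(np.linalg.eigvalsh(S_shrunk).min()))
```

In practice the shrinkage weight would be chosen by cross-validation or an analytic estimator; the fixed value here only illustrates the mechanism.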

Pitfalls & Nuanced Debates

- Synthetic realism vs. reality gap

Synthetic data is necessary, but without careful simulation of sensor noise, subject variability, and complex biomechanics, we risk building models tuned to lab elegance, not messy clinical use.

- Explainability in high-stakes decisions

Clinicians won’t trust a model unless it says why it made a decision. XAI techniques such as saliency maps or layer-wise relevance help, but interpreting noisy patterns remains tricky.

- Balancing bias and variance

Training on small, unbalanced datasets means decisions about regularization matter. Too little leads to overfitting; too much, and meaningful patient differences get lost.

- Architectural transparency

Models must be interpretably lean, not sprawling deep nets. Modular architectures that mirror physical processes (e.g., separate torque and fatigue modules) are easier to understand and adapt.
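The bias-variance pitfall above can be made concrete with a small sketch. It assumes a linear model with ridge (L2) regularization and a synthetic 20-subject dataset; the candidate strengths in `lams` are arbitrary illustrative values. Leave-one-out cross-validation is one reasonable way to pick the strength when every sample is precious, since each subject is held out exactly once.

```python
import numpy as np

rng = np.random.default_rng(2)

# Hypothetical small dataset: 20 subjects, 10 features, only 3 of them informative.
n, p = 20, 10
X = rng.standard_normal((n, p))
w_true = np.zeros(p)
w_true[:3] = [1.5, -1.0, 0.5]
y = X @ w_true + 0.5 * rng.standard_normal(n)

def ridge_fit(X, y, lam):
    """Closed-form ridge regression weights."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

def loo_mse(X, y, lam):
    """Leave-one-out mean squared error for a given regularization strength."""
    errors = []
    for i in range(len(y)):
        keep = np.arange(len(y)) != i            # hold out subject i
        w = ridge_fit(X[keep], y[keep], lam)
        errors.append((X[i] @ w - y[i]) ** 2)
    return float(np.mean(errors))

lams = [1e-3, 1e-2, 1e-1, 1.0, 10.0, 100.0, 1000.0]
scores = {lam: loo_mse(X, y, lam) for lam in lams}
best = min(scores, key=scores.get)

for lam in lams:
    print(f"lambda={lam:<8g} leave-one-out MSE={scores[lam]:.3f}")
print("selected lambda:", best)
```

Very small values leave the model free to fit noise, while very large values shrink all weights toward zero and erase real differences between subjects; the cross-validated error makes that trade-off visible.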

A Roadmap Forward

Clinically accessible inputs: Force, basic kinematics, and imaging modalities already available in the clinic must form the data backbone.

Hybrid architectures: Combine transfer learning (simulation → real), XAI for internal validation, and synthetic data augmentation that’s tuned not to mislead.

Transparent metrics: Validate performance not just by accuracy, but also by confidence intervals, clinically relevant error (e.g., torque ± 2 Nm), and XAI concordance scores (how often model emphasis matches physician judgment).

Multidisciplinary collaboration: Engineers simulate; clinicians annotate; mathematicians analyze; data scientists optimize.
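As a rough illustration of the transparent metrics item, the sketch below reports a bootstrap confidence interval for mean absolute error and the fraction of predictions within a clinical tolerance. The torque values are randomly generated placeholders, and the function names, the 2 Nm tolerance, and the percentile bootstrap are assumptions chosen for the example, not a prescribed validation protocol.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean absolute error."""
    return float(np.mean(np.abs(y_pred - y_true)))

def within_tolerance(y_true, y_pred, tol):
    """Fraction of predictions whose absolute error is at most `tol`."""
    return float(np.mean(np.abs(y_pred - y_true) <= tol))

def bootstrap_ci(y_true, y_pred, stat, n_boot=2000, level=0.95, seed=0):
    """Percentile bootstrap interval for `stat`, resampling subjects with replacement."""
    rng = np.random.default_rng(seed)
    n = len(y_true)
    stats = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)
        stats[i] = stat(y_true[idx], y_pred[idx])
    tail = (1.0 - level) / 2.0
    lo, hi = np.quantile(stats, [tail, 1.0 - tail])
    return float(lo), float(hi)

# Placeholder torques (Nm) for 30 hypothetical test subjects.
rng = np.random.default_rng(3)
y_true = rng.uniform(5.0, 40.0, size=30)
y_pred = y_true + rng.normal(0.0, 1.5, size=30)

point = mae(y_true, y_pred)
lo, hi = bootstrap_ci(y_true, y_pred, mae)
frac = within_tolerance(y_true, y_pred, tol=2.0)

print(f"MAE = {point:.2f} Nm (95% bootstrap CI {lo:.2f} to {hi:.2f})")
print(f"fraction within ±2 Nm: {frac:.2f}")
```

With only a few dozen test subjects the interval is typically wide, and reporting it alongside the point estimate shows how much of the apparent performance is backed by data.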

Key References on AI with Sparse Biomechanical & Clinical Data

- Lindbeck, E. M., et al. (2023). Predictions of thumb, hand, and arm muscle parameters derived using force measurements of varying complexity and neural networks. Journal of Biomechanics, 161, 111834. https://doi.org/10.1016/j.jbiomech.2023.111834

- Tappan, I., et al. (2024). Explainable AI Elucidates Musculoskeletal Biomechanics: A Case Study Using Wrist Surgeries. Annals of Biomedical Engineering, 52, 498–509. https://doi.org/10.1007/s10439-023-03394-9

- Kearney, K. M., et al. (2024). From Simulation to Reality: Predicting Torque with Fatigue Onset via Transfer Learning. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 32, 3669–3676. https://doi.org/10.1109/TNSRE.2024.3465016

- Diaz, M. T., et al. (2025). Evaluating Recruitment Methods for Selection Bias: A Large, Experimental Study of Hand Biomechanics. Journal of Biomechanics, 112558. https://doi.org/10.1016/j.jbiomech.2025.112558

- Amirian, S., et al. (2023). Explainable AI in Orthopedics: Challenges, Opportunities, and Prospects. arXiv. https://arxiv.org/abs/2308.04696

Developing AI for medicine and biomechanics under data constraints is more than a technical challenge—it’s a test of creativity, collaboration, and humility. By combining synthetic data, careful architecture, and human-aligned explainability, we can build AI that doesn’t just predict—it understands, explains, and helps.
